In today’s episode of “things conservatives made up themselves” we have the ‘woke’ evolution of ChatGPT.

All across the internet, conservative media outlets are panicking over the idea that AI is biased.

And they’re right. Experts have warned for years that systems built on machine learning, such as ChatGPT and facial recognition software, are in fact biased.

But they’re biased against minorities, not conservatives.

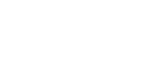

National Review started the furor by publishing a piece accusing ChatGPT of being “woke” after it declined to explain why drag queen story hour is “bad.”

The piece prompted conservatives to test ChatGPT with questions designed to needle it into giving “woke” answers.

But what they’re missing is the fact that ChatGPT’s inability to spout racism or anti-transgender sentiment is the result of years of careful programming to avoid anti-minority bias.

AI ethics requires programmers to block certain outputs to ensure that the AI is not perpetuating harm against vulnerable minorities.

Basically, the programmers had to make a choice, and it seems the choice they made to do the least harm does not align with far-right beliefs.
